
Parameters

| Field | Value |
|---|---|
| Part number | MVTX2601 |
| Manufacturer | Zarlink Semiconductor Inc. |
| Description | Unmanaged 24-Port 10/100 Mbps Ethernet Switch |
| Pages | 24 of 91 |
| File size | 686K |


MVTX2601

Data Sheet

24

Zarlink Semiconductor Inc.

7.4 Strict Priority and Best Effort

When strict priority is part of the scheduling algorithm, if a strict priority queue has even one frame to transmit, it goes first. Two of our four QoS configurations include strict priority queues. Strict priority classes are intended for IETF expedited forwarding (EF), where performance guarantees are required. As indicated earlier, strict priority traffic must be either policed or implicitly bounded so that it does not harm the other traffic classes.
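The strict-priority rule can be sketched as a queue-selection function. This is a minimal illustration, not the device's logic: the function name, the list-of-deques layout, and the `sp_order` parameter (strict-priority class indices, highest first) are all hypothetical.

```python
from collections import deque
from typing import Optional, Sequence


def pick_strict_priority(queues: Sequence[deque], sp_order: Sequence[int]) -> Optional[int]:
    """Return the strict-priority queue to serve next, or None.

    queues[i] holds the frames waiting in class i (hypothetical layout).
    sp_order lists the strict-priority class indices, highest priority first.
    """
    for index in sp_order:
        if queues[index]:  # a single waiting frame is enough to go first
            return index
    return None  # no strict-priority backlog: the other scheduler decides
```

For example, with `queues = [deque(), deque(["a"]), deque(), deque(["b"])]` and `sp_order=[3]`, the function returns `3`, regardless of the backlog in the other classes.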

When best effort is part of the scheduling algorithm, a best effort queue receives bandwidth only when none of the other classes has any traffic to offer. Two of our four QoS configurations include best effort queues. Best effort classes are intended for non-essential traffic, because we provide no assurances about best effort performance. In a typical network, however, much best effort traffic is still transmitted, and with adequate promptness.
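The best-effort eligibility condition reduces to a simple check. In the sketch below, `be_index` is a hypothetical parameter: which queue is best effort depends on the QoS configuration, and the function is an illustration rather than the MVTX2601 implementation.

```python
from collections import deque
from typing import Sequence


def best_effort_may_send(queues: Sequence[deque], be_index: int) -> bool:
    """Return True if the best-effort class may transmit now.

    Best effort gets bandwidth only when it has a frame queued and every
    other class is empty. be_index (hypothetical) names the best-effort queue.
    """
    others_idle = all(not q for i, q in enumerate(queues) if i != be_index)
    return bool(queues[be_index]) and others_idle
```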

Because we provide no delay assurances for best effort traffic, we do not enforce latency by dropping best effort traffic. Likewise, because we assume that strict priority traffic is carefully controlled before it enters the MVTX2601, we do not enforce a fair bandwidth partition by dropping strict priority traffic. In short, dropping to enforce bandwidth or delay does not apply to strict priority or best effort queues; frames are dropped from these queues only when global buffer resources become scarce.
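The dropping policy above can be summarized as a predicate. This sketch assumes two hypothetical inputs not named in the datasheet: `class_rule_triggered` (a per-class bandwidth or delay rule has fired) and `low_watermark` (the global free-buffer level below which buffers count as scarce).

```python
from enum import Enum


class QueueKind(Enum):
    STRICT_PRIORITY = "strict priority"
    BEST_EFFORT = "best effort"
    SCHEDULED = "delay-bounded or WFQ class"


def may_drop(kind: QueueKind, class_rule_triggered: bool,
             free_buffers: int, low_watermark: int) -> bool:
    """Decide whether a frame in a queue of this kind may be dropped.

    class_rule_triggered and low_watermark are hypothetical inputs.
    """
    if free_buffers < low_watermark:
        return True  # global buffer scarcity: every queue kind may lose frames
    if kind in (QueueKind.STRICT_PRIORITY, QueueKind.BEST_EFFORT):
        return False  # no bandwidth/delay enforcement drops for SP or BE
    return class_rule_triggered
```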

7.5 Weighted Fair Queuing

In some environments (for example, where delay assurances are not required but precise bandwidth partitioning on small time scales is essential), WFQ may be preferable to a delay-bounded scheduling discipline. The MVTX2601 offers a WFQ option, with the understanding that delay assurances cannot be provided if the incoming traffic pattern is uncontrolled. The user sets four WFQ “weights”; the weights must be whole numbers that sum to 64. This yields per-class bandwidth partitioning with an error within 2%.
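The weight constraint can be checked in software before the weights are programmed. The sketch below validates four weights and converts them to bandwidth fractions. The function name is hypothetical, and it models only the stated arithmetic (each class receives weight/64 of the bandwidth), not the hardware scheduler.

```python
from typing import List, Sequence

WFQ_WEIGHT_TOTAL = 64  # the four weights must sum to 64


def wfq_shares(weights: Sequence[int]) -> List[float]:
    """Validate four WFQ weights and return each class's bandwidth fraction."""
    if len(weights) != 4:
        raise ValueError("expected exactly four WFQ weights")
    if any(isinstance(w, bool) or not isinstance(w, int) or w < 0 for w in weights):
        raise ValueError("weights must be whole numbers")
    total = sum(weights)
    if total != WFQ_WEIGHT_TOTAL:
        raise ValueError(f"weights must sum to {WFQ_WEIGHT_TOTAL}, got {total}")
    return [w / WFQ_WEIGHT_TOTAL for w in weights]
```

For example, `wfq_shares([32, 16, 8, 8])` returns `[0.5, 0.25, 0.125, 0.125]`. Because the weights sum to 64, one weight unit corresponds to 1/64 (about 1.56%) of the bandwidth.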

In WFQ mode, although frame latency is not assured, the MVTX2601 still applies a set of dropping rules that help prevent congestion and trigger end-to-end flow control in higher-level protocols.

As before, when strict priority is combined with WFQ, there are no special dropping rules for the strict priority (SP) queues, because the input traffic pattern is assumed to be carefully controlled at an earlier stage. However, frames are still dropped from SP queues for global buffer management purposes. In addition, queue P0 of a 10/100 port is treated as best effort from a dropping perspective, although it is still assured a percentage of bandwidth from a WFQ scheduling perspective. This means that these queues are affected by dropping only when the global buffer count becomes low.
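The WFQ-mode dropping treatment can be expressed as a small classification. In this sketch, queue names such as `"P0"` follow the datasheet, but the function, the enum, and the `port_is_10_100` flag are hypothetical. Which queues are strict priority depends on the configuration, so the caller supplies that flag.

```python
from enum import Enum


class DropPolicy(Enum):
    GLOBAL_ONLY = "dropped only when the global buffer count is low"
    WFQ_RULES = "also subject to the WFQ-mode dropping rules"


def wfq_drop_policy(queue: str, is_strict_priority: bool,
                    port_is_10_100: bool = True) -> DropPolicy:
    """Classify a queue's dropping treatment in WFQ mode (illustrative only)."""
    if is_strict_priority:
        return DropPolicy.GLOBAL_ONLY  # input assumed controlled upstream
    if queue == "P0" and port_is_10_100:
        # best effort for dropping purposes; it still keeps its WFQ bandwidth share
        return DropPolicy.GLOBAL_ONLY
    return DropPolicy.WFQ_RULES
```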

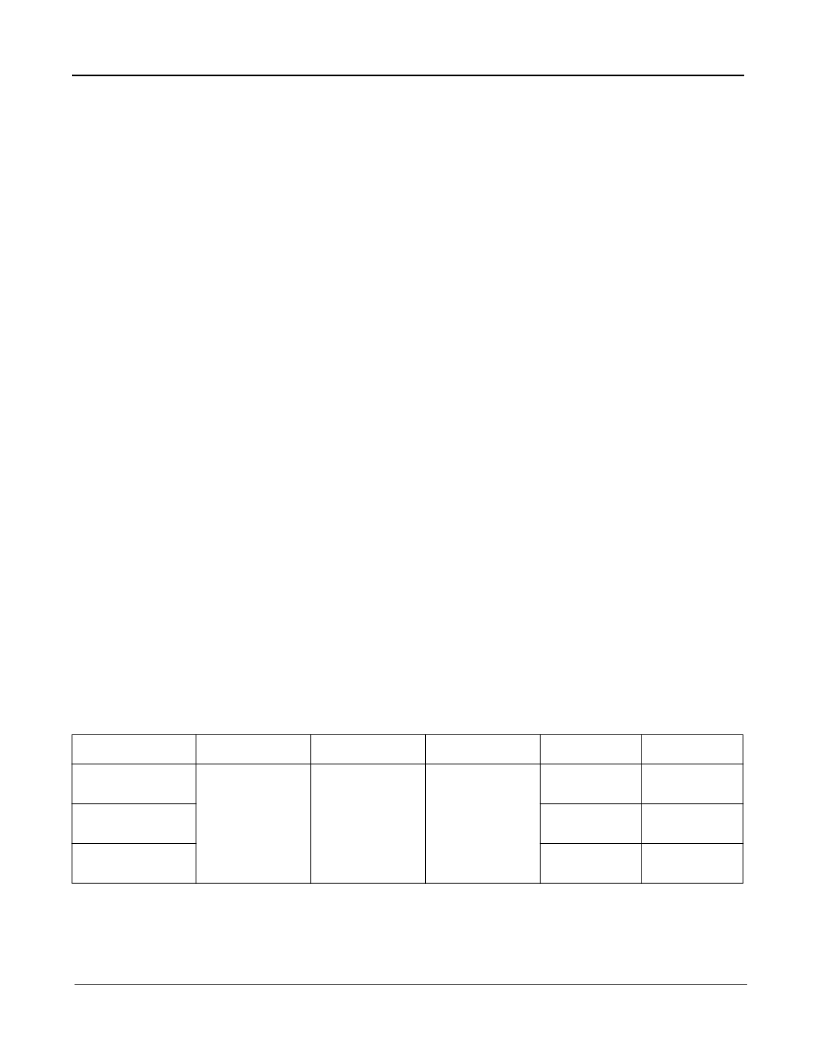

7.6 WRED Drop Threshold Management Support

To avoid congestion, the Weighted Random Early Detection (WRED) logic drops packets according to specified parameters. The following table summarizes the behavior of the WRED logic.

Table 7 - WRED Drop Thresholds

All threshold values are in KB (kilobytes).

| | N | P3 | P2 | P1 | High Drop | Low Drop |
|---|---|---|---|---|---|---|
| Level 1 | N ≥ 120 | P3 ≥ A KB | P2 ≥ A KB | P1 ≥ A KB | X% | 0% |
| Level 2 | N ≥ 140 | | | | Y% | Z% |
| Level 3 | N ≥ 160 | | | | 100% | 100% |

Px is the total byte count in priority queue x. The WRED logic has three drop levels, selected by the value of N, which is derived from the byte counts of the priority queues. If delay-bound scheduling is used, N = P3×16 + P2×4 + P1. If WFQ scheduling is used, N = P3 + P2 + P1. Each drop level from one to three has defined …
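The computation of N and the selection of a drop level can be sketched as follows. Several assumptions apply. Byte counts are taken in KB to match the table's thresholds. X, Y, and Z are passed in because their values are not given here. Level 0, meaning no WRED drop below the Level 1 threshold, is an assumption. The per-queue conditions (P3, P2, P1 ≥ A KB) are not modeled. All function names are hypothetical.

```python
from typing import Tuple

# (level, minimum N in KB) from Table 7, checked from the highest level down.
WRED_LEVELS = ((3, 160), (2, 140), (1, 120))


def wred_n(p3_kb: float, p2_kb: float, p1_kb: float, delay_bound: bool) -> float:
    """Compute N from the P3..P1 byte counts (in KB); P0 is not part of N."""
    if delay_bound:
        return p3_kb * 16 + p2_kb * 4 + p1_kb
    return p3_kb + p2_kb + p1_kb  # WFQ scheduling


def wred_level(n_kb: float) -> int:
    """Return the drop level reached by N, or 0 if below every threshold."""
    for level, threshold in WRED_LEVELS:
        if n_kb >= threshold:
            return level
    return 0


def drop_percentages(level: int, x: float, y: float, z: float) -> Tuple[float, float]:
    """Return (High Drop %, Low Drop %) for a level; x, y, z are Table 7's X, Y, Z.

    Level 0 -> (0, 0) is an assumption (no WRED drop below Level 1).
    """
    table = {0: (0.0, 0.0), 1: (x, 0.0), 2: (y, z), 3: (100.0, 100.0)}
    return table[level]
```

For example, with P3 = 5 KB, P2 = 10 KB, and P1 = 20 KB, delay-bound scheduling gives N = 80 + 40 + 20 = 140, which reaches Level 2. WFQ scheduling gives N = 35, which is below Level 1. The weighting makes the delay-bound N react much more strongly to backlog in the higher-priority queues.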

Related PDF documents

| Part number | PDF description |
|---|---|
| MVTX2601AG | Unmanaged 24-Port 10/100 Mbps Ethernet Switch |
| MVTX2602 | Managed 24 Port 10/100 Mbps Ethernet Switch |
| MVTX2602AG | Managed 24 Port 10/100 Mbps Ethernet Switch |
| MVTX2603 | Unmanaged 24-Port 10/100 Mb + 2-Port 1 Gb Ethernet Switch |
| MVTX2603AG | Unmanaged 24-Port 10/100 Mb + 2-Port 1 Gb Ethernet Switch |

Related distributors / technical parameters

| Part number | Parameter description |
|---|---|
| MVTX2601A | Manufacturer: unknown; full manufacturer name: unknown; function: Unmanaged 24 port 10/100Mb Ethernet switch |
| MVTX2601AG | Manufacturer: ZARLINK; full manufacturer name: Zarlink Semiconductor Inc; function: Unmanaged 24-Port 10/100 Mbps Ethernet Switch |
| MVTX2601AG2 | Manufacturer: Microsemi Corporation; function: |
| MVTX2602 | Manufacturer: ZARLINK; full manufacturer name: Zarlink Semiconductor Inc; function: Managed 24 Port 10/100 Mbps Ethernet Switch |
| MVTX2602A | Manufacturer: unknown; full manufacturer name: unknown; function: MVTX260x Port Mirroring |
